How can we evaluate narrative change?

Background

JUMA is a small, dynamic association for young Muslims who want to have a say in and contribute to German society. Part of their vision is to bring the perspectives of young Muslims into social debates. Participating in ICPA’s Narrative Change Lab, JUMA members saw the importance of reaching out to audiences that are skeptical about migration, integration and Islam, to help rebalance these heated public debates by sharing positive and authentic stories.

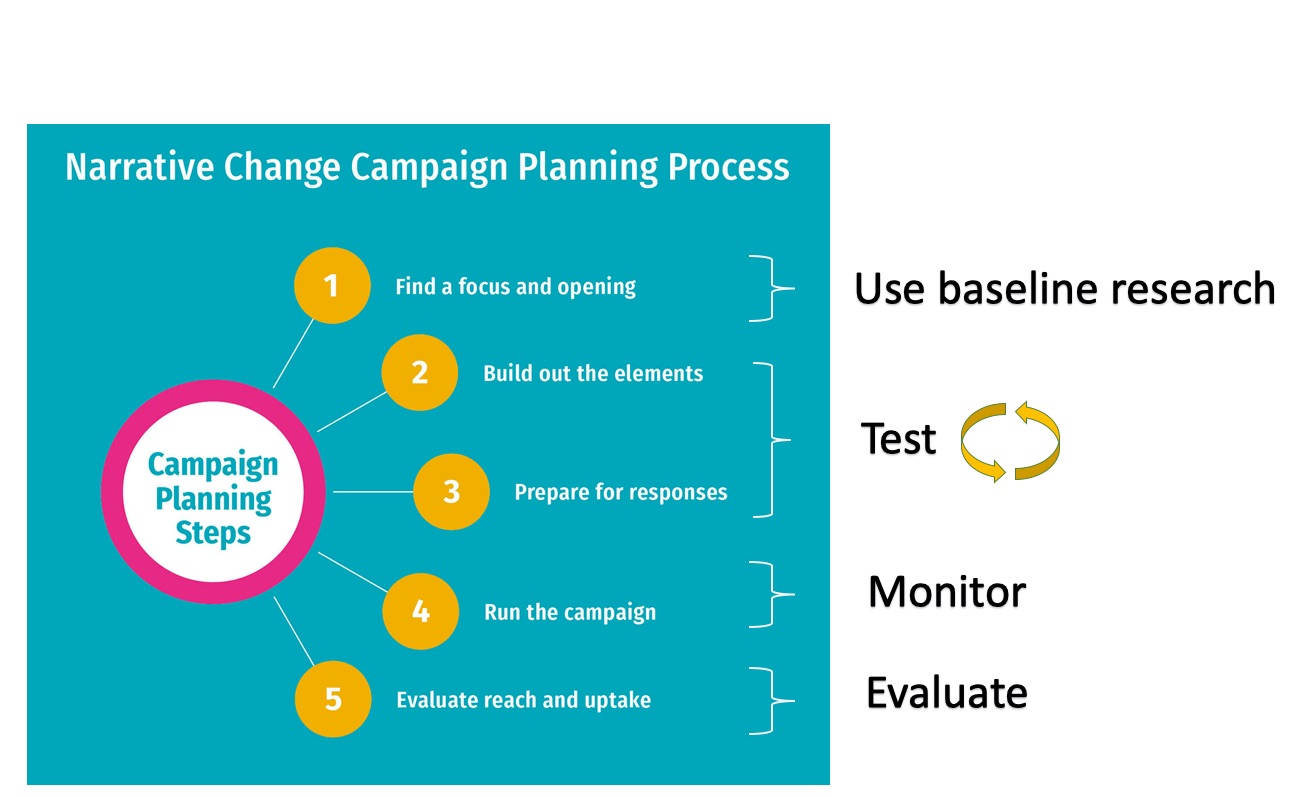

Through the Lab, and based on baseline research, the JUMA team developed a strategy for engaging with so-called ‘movable middle’ audiences, who are neither pro nor anti, but feel conflicted and have doubts. Focus group testing during the campaign development process helped them narrow the focus of their 2019 “Together Human” campaign to reach ‘economic pragmatists’, who are positive about the contribution diversity makes to Germany’s culture and economy, but unsure whether integration is possible and critical towards Islam and Muslims (see More in Common’s 2017 research). This would be a pilot campaign, to see if narrative change campaigning to the movable middle can work in Germany (with most of the exemplary work coming from the US and UK). For a full account of the development and testing of JUMA’s campaign, see this case study by our partner Social Change Initiative (SCI).

In 2019 JUMA built out their campaign. The team created videos, posters and messages which they launched in November 2019. ICPA provided advice and mentoring on creative development (more on this process here), talking points and monitoring & evaluation.

JUMA initially hoped to appoint a team member to be responsible for media partnerships but, due to limited capacity, decided to focus their energy on producing high-quality creative outputs. This is one of the limitations of the pilot: it did not target media coverage and consequently couldn’t expect wider uptake among politicians or opinion leaders. JUMA did, however, aim to reach economic pragmatists with a message that resonated and led to positive opinion shifts. Assessing the extent to which this occurred therefore became one of the major goals of the evaluation, while tracking media response and uptake was secondary (but equally important for learning lessons).

In this blogpost we outline the steps we took when advising JUMA on their evaluation and share some of the lessons we learned, with the aim of supporting other activists to also evaluate their campaigns.

Steps for narrative change campaign evaluation

Step 1: Be clear on campaign targets

Using the evaluation framework in the Reframing Migration Toolkit, we worked with JUMA to define campaign targets at three levels: reach, response and uptake.

Here's what we mean by that:

| Evaluation level | Definition | JUMA’s target |
|---|---|---|
| Reach | Reaching your target audience | To reach economic pragmatists, as defined by More in Common’s 2017 German study, in the 3 cities of Berlin, Stuttgart and Leipzig. |
| Response | Achieving the response you want from the target audience and in the media | To reinforce or ‘turn up’ the positive aspects of economic pragmatists’ opinions on migration and Muslims, and ‘turn down’ or reduce their anxieties about Islam and integration, creating a warm, positive response. JUMA recognised the value of media coverage for reaching their target audience but did not have capacity to focus on this in 2019. |
| Uptake | Getting your messages/narrative adopted in the wider public debate and policy positions | The long-term aim for JUMA is a more positive policy making environment on issues of migration and integration. As this was a pilot campaign, however, there was not a specific political or policy goal. |

Knowing your campaign targets allows you to identify the best methods to assess whether they are met, and to set metrics and benchmarks for success.

Step 2: Select the methods

Working from the targets above, we identified data sources that would allow the JUMA team to track progress on these targets during campaign delivery (monitoring) and reflect on the overall success of the campaign afterwards (evaluation). We then worked with JUMA to select the methods, weighing up the costs and benefits of each in terms of time, money and data quality.

These were the core methods chosen for monitoring & evaluation:

| Evaluation level | Method | Purpose |

|---|---|---|

| Reach and Response |

Social media monitoring – via back-end social media analytics/insights and with help from the platform Keyhole. |

To track key social media metrics – reach, engagement and sentiment of reactions and comments. |

| Response – opinion shift |

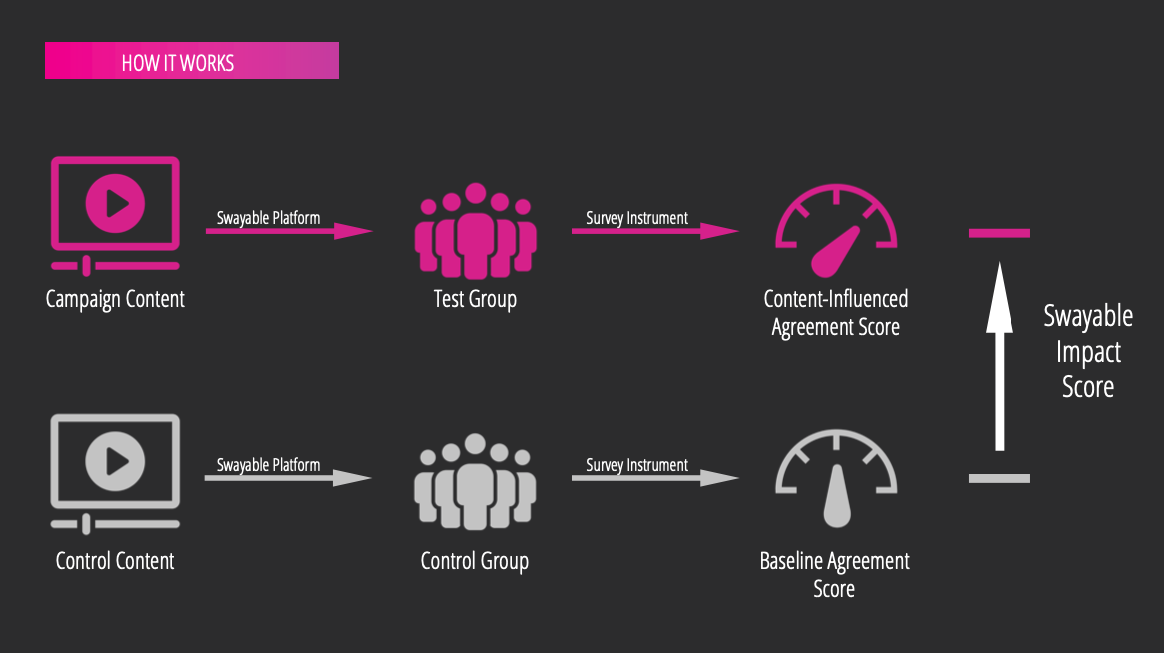

Randomised Controlled Test of campaign videos – run in collaboration with the platform Swayable before the campaign went live. |

To test and measure the impact of the campaign video content on opinions, by comparing the opinion shift in a test audience (who saw JUMA’s videos) with a control audience. |

| Response – media |

Media monitoring – done manually by the team in a monitoring log. |

To log media coverage achieved by the campaign in terms of article/broadcast reach, length and sentiment. |

| Response – media |

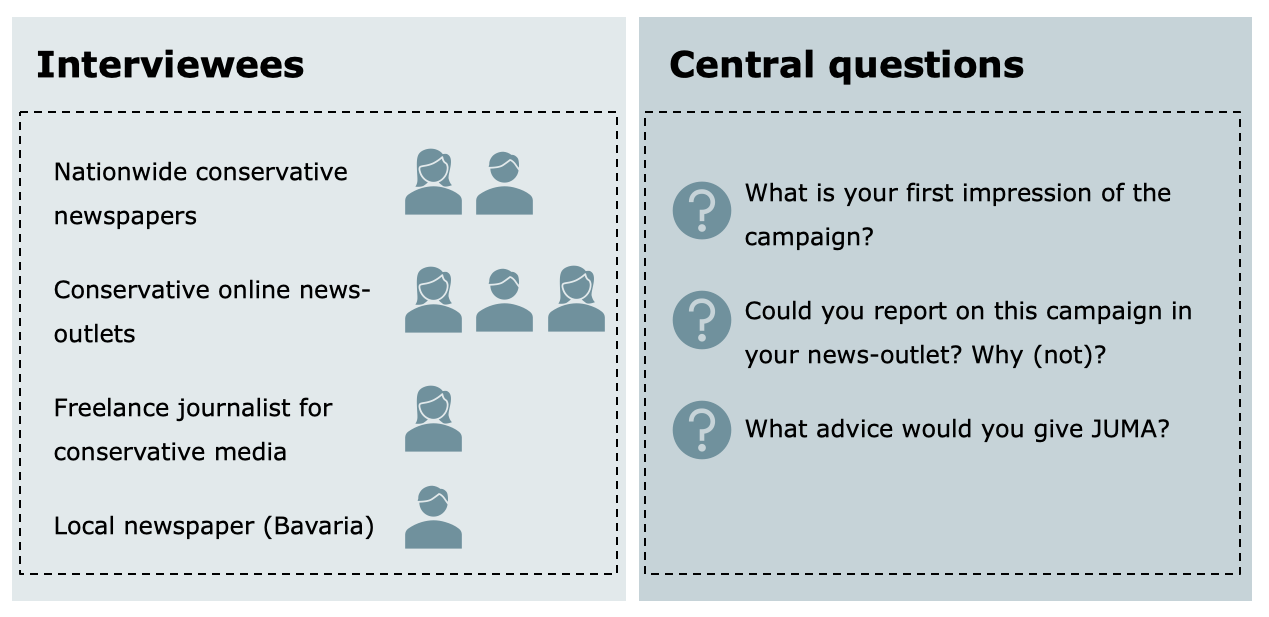

Telephone interviews with journalists – conducted by Syspons after the campaign. |

To explore the extent to which journalists from media publications trusted by the target audience considered the campaign to be newsworthy. We added this method to ensure we could still learn about pitching and packaging campaigns for the media despite JUMA not having capacity to focus on this in the pilot. |

| Uptake |

Case study collection – manual collection by the team in a log. |

To track and assess whether influencers (e.g. opinion leaders and politicians) started using the campaign’s messages, stories and slogans. |

You may notice that one of the methods included in our evaluation was actually also a testing method. We used a Randomised Controlled Trial with Swayable both to support JUMA with the final stages of their campaign development (testing near-final content) and in the evaluation (using opinion shift data). Although tests like this are conducted in artificial or controlled settings, they can nonetheless provide useful data on audience responses and messaging effects. Combined with data from the live roll-out, they help form a more complete picture of campaign impact. We are grateful to Jenni Winterhagen (at that time a Manager at Syspons) for sharing this insight during the JUMA evaluation design process, so that early on we realised that Swayable could be both a useful test and evidence of potential impact on audience opinions.
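
To make the idea of comparing a test audience with a control audience concrete, here is a minimal sketch of how an opinion shift could be calculated from survey answers. It is a generic difference-in-proportions calculation, not a description of how Swayable computes its results. The function name `opinion_shift`, the 0/1 coding of answers and all the numbers are assumptions made up for illustration.

```python
from math import sqrt


def opinion_shift(control, treatment):
    """Return (shift, standard_error), both in percentage points.

    control / treatment: lists of 0/1 answers, where 1 means the respondent
    gave the 'positive' answer to a survey question (hypothetical coding).
    """
    if not control or not treatment:
        raise ValueError("both groups need at least one respondent")
    p_control = sum(control) / len(control)
    p_treatment = sum(treatment) / len(treatment)
    # Standard error of a difference between two independent proportions.
    se = sqrt(
        p_control * (1 - p_control) / len(control)
        + p_treatment * (1 - p_treatment) / len(treatment)
    )
    return 100 * (p_treatment - p_control), 100 * se


# Made-up illustration data, not JUMA's results.
control = [1] * 40 + [0] * 60    # control group: did not see the video
treatment = [1] * 50 + [0] * 50  # test group: watched the video
shift, se = opinion_shift(control, treatment)
print(f"Opinion shift: {shift:.1f} points (standard error {se:.1f})")
```

The standard error gives a rough sense of how much of the shift could be down to chance: with small groups, even a large-looking shift may not be reliable, which is one reason to use a platform or research partner that recruits enough respondents.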

During the live campaign, ICPA worked alongside the JUMA team to collect campaign performance data using the monitoring methods.

Step 3: Reflect on the results in comparison to campaign targets

After the campaign finished, ICPA and JUMA developed short reports for discussion and reflected together on the results.

In a group reflection session, we asked ourselves the following questions and reflected on the following findings:

| Evaluation level | Question | Data sources | Key reflections |
|---|---|---|---|
| Reach | Did the campaign reach enough of the target audience to have an impact? | Social media monitoring log and Keyhole. We calculated the proportion of the target audience reached by comparing the estimated target audience population size to the reach on social media. | We found a reach of 5-7%, which was felt to be meaningful but probably not sufficient to change the conversation among the target audience, leading to a discussion about working more with messengers and influencers. |
| Response | Was the response warm, engaged and positive among the target audience? | Social media monitoring log and Keyhole. Looking at the social media metric of engagement over reach and the proportion of positive reactions, as well as analysing a random sample of comments, we evaluated the extent of positivity. As JUMA could not compare these results with a previous campaign, we used global benchmarks (like these). | The warm and positive response on social media (around 99% of reactions were positive) and high engagement (average engagement rate of 34% on Facebook video posts) demonstrated good resonance. |
| Response – opinion shift | Did the campaign persuade/move the target audience in their opinions on migration, integration and Muslims in Germany? | Randomised controlled test with Swayable. This test enabled us to explore the % shift in target audience opinions on key questions caused by watching JUMA’s campaign videos. Swayable benchmarked these results for us against other videos tested on their platform. | All three of JUMA’s campaign videos moved middle audiences to be more positive about migration and less anxious about the integration of Muslims in German society. They shifted opinions in a positive direction by between 6% and 12%. This ranks in the top 5-10% of all content tested by Swayable, which is an especially impressive result. |
| Response – media | Did journalists who can reach the target audience want to cover the campaign? | Media monitoring and telephone interviews with journalists. We discussed the lack of media coverage found through the log and qualitative feedback received from journalists regarding the newsworthiness of the campaign materials. | Noting the lack of coverage of the 2019 campaign, JUMA identified steps that could be taken in future to package campaign messages for news outlets and broadcasters, pitching them in connection to existing events and debates (acting as ‘hooks’ for the story), in order to achieve presence in publications valued by the target audience. |
| Uptake | Did the campaign message get picked up and used by influential people? | Case study log. We reflected on the lack of uptake logged during the campaign and why this could have been. | We concluded that the pilot’s limited reach and lack of media coverage made it less likely that it would attract the attention and mimicry of politicians or other opinion leaders. These are elements to work on more proactively in future. |
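
The reach and engagement figures in the table above are simple ratios, so they are easy to reproduce from a monitoring log. The sketch below shows the arithmetic under our own assumptions: the helper `percentage`, the variable names and every number are hypothetical and are not JUMA's data.

```python
def percentage(part, whole):
    """Return part as a percentage of whole."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100 * part / whole


# All figures below are made up for illustration; they are not JUMA's data.
estimated_audience = 900_000  # estimated size of the target audience
people_reached = 45_000       # people reached on social media
engagements = 250             # reactions, comments and shares on one post
post_reach = 1_000            # people who saw that post
positive_reactions = 180      # e.g. likes and hearts
all_reactions = 200           # all reactions, positive or negative

# Reach: share of the estimated target audience reached.
reach_share = percentage(people_reached, estimated_audience)
# Response: engagement over reach, and share of positive reactions.
engagement_rate = percentage(engagements, post_reach)
positive_share = percentage(positive_reactions, all_reactions)

print(f"Share of target audience reached: {reach_share:.1f}%")
print(f"Engagement rate: {engagement_rate:.1f}%")
print(f"Positive reactions: {positive_share:.1f}%")
```

Keeping the calculation this explicit makes it easier to compare results against a benchmark, or against your own next campaign, using the same definitions each time.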

Reflecting on these questions and results, we were able to assess the overall impact of the campaign and explore lessons learned for the next wave of campaigning.

We were all especially encouraged by the ‘response’ results, which showed that middle audiences really are movable, when reached with messages that tap into their values and achieve a mix of resonance and dissonance. As a pilot, it therefore demonstrated that narrative change campaigning can work in Germany!

The lack of media response was not surprising, given that the team had not been able to focus capacity on media partnerships. It helped us all learn the lesson that ‘content is not King’ – no matter how high the quality of your content, it will not spread itself. For your messages to achieve wide reach and be picked up in the media and ultimately by opinion leaders, you need to actively promote your content, hooking it to current events and debates, and working with authentic messengers and influencers.

Step 4: Share the lessons with the community of activists and campaigners

The final step we took was to share the lessons of the JUMA 2019 campaign evaluation with our wider network of campaigners and activists working on narrative change in the migration debate. Together with JUMA, we hosted a webinar which was attended by activists from 5 European countries.

We wanted to set the agenda that testing, monitoring and evaluating are valuable and essential to communications practice, and also to share findings about narratives that are effective in moving key audiences so that they can be built on by others.

Pooling our knowledge has benefits for the whole movement, allowing us to avoid repeating mistakes, build on successes and increase the collective impact of our work.

Conclusion

Narrative change is sometimes seen as abstract and immeasurable, but through this experience with JUMA and through working with other international practitioners we have seen that it is possible to identify and measure metrics for success. There is a range of methods available to activists and campaigners, with different price tags, making it possible for even small organisations to monitor and evaluate. The key is to start with clearly defined campaign targets and build your monitoring & evaluation plan from there. By tracking your impact you will build the basis for your future communications strategy and help others in the wider network do the same.